Example Scripts¶

This is a collection of example scripts for some of Ursgal’s functionality. The simple example scripts are designed to get familiar with executing engines through Ursgal. Examles of complete workflows can be modified for different needs. Further example scripts can also be found in ~ursgalexample_scripts

Simple Example Scripts¶

Simple example search¶

-

simple_example_search.main()¶ Executes a search with OMSSA, XTandem and MS-GF+ on the BSA1.mzML input_file

- usage:

- ./simple_example_search.py

Note

Myrimatch does not work with this file. To use MSAmanda on unix platforms, please install mono (http://www.mono-project.com/download)

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import sys

import shutil

def main():

"""

Executes a search with OMSSA, XTandem and MS-GF+ on the BSA1.mzML

input_file

usage:

./simple_example_search.py

Note:

Myrimatch does not work with this file.

To use MSAmanda on unix platforms, please install mono

(http://www.mono-project.com/download)

"""

uc = ursgal.UController(

profile="LTQ XL low res",

params={

"database": os.path.join(os.pardir, "example_data", "BSA.fasta"),

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation

],

# 'peptide_mapper_class_version' : 'UPeptideMapper_v2',

},

)

if sys.maxsize > 2 ** 32:

xtandem = "xtandem_vengeance"

else:

xtandem = "xtandem_sledgehammer"

engine_list = [

"omssa",

xtandem,

"msgfplus_v2016_09_16",

]

mzML_file = os.path.join(

os.pardir, "example_data", "BSA_simple_example_search", "BSA1.mzML"

)

if os.path.exists(mzML_file) is False:

uc.params[

"http_url"

] = "http://sourceforge.net/p/open-ms/code/HEAD/tree/OpenMS/share/OpenMS/examples/BSA/BSA1.mzML?format=raw"

uc.params["http_output_folder"] = os.path.dirname(mzML_file)

uc.fetch_file(

engine="get_http_files_1_0_0",

)

try:

shutil.move("{0}?format=raw".format(mzML_file), mzML_file)

except:

shutil.move("{0}format=raw".format(mzML_file), mzML_file)

unified_file_list = []

for engine in engine_list:

unified_search_result_file = uc.search(

input_file=mzML_file, engine=engine, force=False

)

unified_file_list.append(unified_search_result_file)

uc.visualize(

input_files=unified_file_list,

engine="venndiagram_1_1_0",

)

return

if __name__ == "__main__":

main()

Simple example using combined fdr (or pep)¶

-

simple_combined_fdr_score.main()¶ Executes a search with 3 different search engines on an example file from the data from Barth et al. (The same file that is used in the XTandem version comparison example.)

- usage:

- ./simple_combined_fdr_score.py

This is a simple example script to show how results from multiple search engines can be combined using the Combined FDR Score approach of Jones et al. (2009).

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Executes a search with 3 different search engines on an example file from the

data from Barth et al. (The same file that is used in the XTandem version

comparison example.)

usage:

./simple_combined_fdr_score.py

This is a simple example script to show how results from multiple search engines

can be combined using the Combined FDR Score approach of Jones et al. (2009).

"""

engine_list = [

"omssa_2_1_9",

"xtandem_piledriver",

# 'myrimatch_2_1_138',

"msgfplus_v9979",

]

params = {

"database": os.path.join(

os.pardir,

"example_data",

"Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

),

"modifications": [],

"csv_filter_rules": [["PEP", "lte", 0.01], ["Is decoy", "equals", "false"]],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_include_ext": [

"JB_FASP_pH8_2-3_28122012.mzML",

],

"ftp_output_folder": os.path.join(

os.pardir, "example_data", "xtandem_version_comparison"

),

"http_url": "https://www.sas.upenn.edu/~sschulze/Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

"http_output_folder": os.path.join(os.pardir, "example_data"),

}

if os.path.exists(params["ftp_output_folder"]) is False:

os.mkdir(params["ftp_output_folder"])

uc = ursgal.UController(profile="LTQ XL low res", params=params)

mzML_file = os.path.join(params["ftp_output_folder"], params["ftp_include_ext"][0])

if os.path.exists(mzML_file) is False:

uc.fetch_file(engine="get_ftp_files_1_0_0")

if os.path.exists(params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

validated_files_list = []

for engine in engine_list:

unified_result_file = uc.search(

input_file=mzML_file,

engine=engine,

)

validated_file = uc.validate(

input_file=unified_result_file,

engine="percolator_2_08",

)

validated_files_list.append(validated_file)

combined_results = uc.combine_search_results(

input_files=validated_files_list,

engine="combine_FDR_0_1",

# use combine_pep_1_0_0 for combined PEP :)

)

print("\tCombined results can be found here:")

print(combined_results)

return

if __name__ == "__main__":

main()

Simple de novo search¶

-

simple_de_novo_search.main()¶ Executes a search with Novor and PepNovo on the BSA1.mzML input_file

- usage:

- ./simple_de_novo_search.py

Note

PepNovo currently only works for Linux. Novor needs to be downloaded from http://rapidnovor.com/novor/standalone/ and stored at <ursgal_path>/resources/<platform>/<architecture>/novor_1_1beta

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import sys

import shutil

def main():

"""

Executes a search with Novor and PepNovo on the BSA1.mzML

input_file

usage:

./simple_de_novo_search.py

Note:

PepNovo currently only works for Linux.

Novor needs to be downloaded from http://rapidnovor.com/novor/standalone/ and

stored at <ursgal_path>/resources/<platform>/<architecture>/novor_1_1beta

"""

uc = ursgal.UController(

profile="LTQ XL low res",

params={

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation

],

},

)

engine_list = [

"novor_1_05",

"pepnovo",

]

mzML_file = os.path.join(

os.pardir, "example_data", "BSA_simple_de_novo_search", "BSA1.mzML"

)

if os.path.exists(mzML_file) is False:

uc.params[

"http_url"

] = "http://sourceforge.net/p/open-ms/code/HEAD/tree/OpenMS/share/OpenMS/examples/BSA/BSA1.mzML?format=raw"

uc.params["http_output_folder"] = os.path.dirname(mzML_file)

uc.fetch_file(

engine="get_http_files_1_0_0",

)

try:

shutil.move("{0}?format=raw".format(mzML_file), mzML_file)

except:

shutil.move("{0}format=raw".format(mzML_file), mzML_file)

unified_file_list = []

for engine in engine_list:

unified_search_result_file = uc.search(

input_file=mzML_file, engine=engine, force=False

)

unified_file_list.append(unified_search_result_file)

uc.visualize(

input_files=unified_file_list,

engine="venndiagram_1_1_0",

)

return

if __name__ == "__main__":

main()

Simple crosslink search¶

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import sys

def main():

"""

Simple crosslink search using Kojak and an example file from Barth et al.

2014, please note that this is only testing if Kojak works.

The sample represents no crosslink data in particular, but Kojak is used to

map possible disulfide bonds.

Parameters have not been optimized yet, please use this script as a template

to use Kojak. Please note the different approach for executing Percolator.

Note:

Please note that Kojak has to installed manually at the resources folder

under engine-folder (kojak_1_5_3).

Additinally Percolator (2.08) has to be symlinked or copied to

engine-folder 'kojak_percolator_2_08'.

Usage:

./simple_srosslink_search.py <path_2_chlamydomonas_reinhardtii_database>

Note:

The peptide -.GHYLNATAGTC[-34.00]EEMMK.- from Rubisco large subunit is

detected with a predicted disulfide bond site at this position.

This site is reported to have a disulfide bond in the Uniprot database.

The modification (C +32, C -34) was set according to 'Tsai PL, Chen SF,

Huang SY (2013) Mass spectrometry-based strategies for protein disulfide

bond identification. Rev Anal Chem 32: 257–268'

Please use the reference C. reinhardtii (TaxId 3055 ) proteome from

Unipot.

"""

params = {

"database": sys.argv[1],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_include_ext": [

"JB_FASP_pH8_2-3_28122012.mzML",

],

"ftp_output_folder": os.path.join(

os.pardir, "example_data", "simple_crosslink_search"

),

"cross_link_definition": ["C C -2 test_if_kojak_runs"],

"mono_link_definition": ["C 32", "C -34"],

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

],

"precursor_min_mass": 500,

"precursor_max_mass": 8000,

"precursor_mass_tolerance_plus": 15,

"precursor_mass_tolerance_minus": 15,

"max_accounted_observed_peaks": 0, # i.e. all

"max_num_mods": 2,

}

if os.path.exists(params["ftp_output_folder"]) is False:

os.mkdir(params["ftp_output_folder"])

uc = ursgal.UController(profile="LTQ XL low res", params=params)

mzML_file = os.path.join(params["ftp_output_folder"], params["ftp_include_ext"][0])

if os.path.exists(mzML_file) is False:

uc.fetch_file(engine="get_ftp_files_1_0_0")

td_database_name = params["database"].replace(".fasta", "_target_decoy.fasta")

if os.path.exists(td_database_name) is False:

uc.execute_misc_engine(

input_files=[params["database"]],

engine="generate_target_decoy_1_0_0",

output_file_name=td_database_name,

)

uc.params["database"] = td_database_name

engine = "kojak_1_5_3"

mgf_file = uc.convert(

input_file=mzML_file,

engine="mzml2mgf",

)

raw_result = uc.search_mgf(

input_file=mgf_file,

engine=engine,

force=False,

)

for extension in uc.unodes[engine]["META_INFO"]["all_extensions"]:

if "perc" not in extension:

continue

file_to_validate = raw_result.replace(

uc.unodes[engine]["META_INFO"]["output_extension"], extension

)

try:

uc.validate(file_to_validate, "kojak_percolator_2_08")

except:

pass

return

if __name__ == "__main__":

main()

Target decoy generation¶

-

target_decoy_generation_example.main()¶ Simple example script how to generate a target decoy database.

Note

By default a ‘shuffled peptide preserving cleavage sites’ database is generated. For this script a ‘reverse protein’ database is generated.

usage:

./target_decoy_generation_example.py

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Simple example script how to generate a target decoy database.

Note:

By default a 'shuffled peptide preserving cleavage sites' database is

generated. For this script a 'reverse protein' database is generated.

usage:

./target_decoy_generation_example.py

"""

params = {

"enzyme": "trypsin",

"decoy_generation_mode": "reverse_protein",

}

fasta_database_list = [os.path.join(os.pardir, "example_data", "BSA.fasta")]

uc = ursgal.UController(params=params)

new_target_decoy_db_name = uc.execute_misc_engine(

input_file=fasta_database_list,

engine="generate_target_decoy_1_0_0",

output_file_name="my_BSA_target_decoy.fasta",

)

print("Generated target decoy database: {0}".format(new_target_decoy_db_name))

if __name__ == "__main__":

main()

Convert .raw to .mzML¶

-

convert_raw_to_mzml.main(input_path=None)¶ Convert a .raw file to .mzML using the ThermoRawFileParser. The given argument can be either a single file or a folder containing raw files.

- Usage:

- ./convert_raw_to_mzml.py <raw_file/raw_file_folder>

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import sys

import glob

def main(input_path=None):

"""

Convert a .raw file to .mzML using the ThermoRawFileParser.

The given argument can be either a single file or a folder

containing raw files.

Usage:

./convert_raw_to_mzml.py <raw_file/raw_file_folder>

"""

R = ursgal.UController()

# Check if single file or folder.

# Collect raw files if folder is given

input_file_list = []

if input_path.lower().endswith(".raw"):

input_file_list.append(input_path)

else:

for raw in glob.glob(os.path.join("{0}".format(input_path), "*.raw")):

input_file_list.append(raw)

# Convert raw file(s)

for raw_file in input_file_list:

mzml_file = R.convert(

input_file=raw_file,

engine="thermo_raw_file_parser_1_1_2",

)

if __name__ == "__main__":

if len(sys.argv) != 2:

print(main.__doc__)

main(input_path=sys.argv[1])

Simple mgf conversion¶

-

simple_mgf_conversion.main()¶ Simple example script to demonstrate conversion for mzML to mgf file conversion

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import shutil

def main():

"""

Simple example script to demonstrate conversion for mzML to mgf file

conversion

"""

uc = ursgal.UController(

profile="LTQ XL low res",

params={},

)

mzML_file = os.path.join(

os.pardir, "example_data", "simple_mzml_to_mgf_conversion", "BSA1.mzML"

)

if os.path.exists(mzML_file) is False:

uc.params[

"http_url"

] = "http://sourceforge.net/p/open-ms/code/HEAD/tree/OpenMS/share/OpenMS/examples/BSA/BSA1.mzML?format=raw"

uc.params["http_output_folder"] = os.path.dirname(mzML_file)

uc.fetch_file(engine="get_http_files_1_0_0")

try:

shutil.move("{0}?format=raw".format(mzML_file), mzML_file)

except:

shutil.move("{0}format=raw".format(mzML_file), mzML_file)

mgf_file = uc.convert(

input_file=mzML_file, engine="mzml2mgf_1_0_0" # from OpenMS example files

)

if __name__ == "__main__":

print(__doc__)

main()

Mgf conversion loop examples¶

-

mgf_conversion_inside_and_outside_loop.main()¶

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import shutil

def main():

""""""

R = ursgal.UController(

profile="LTQ XL low res",

params={

"database": os.path.join(os.pardir, "example_data", "BSA.fasta"),

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation[]

],

},

)

engine = "omssa"

output_files = []

mzML_file = os.path.join(

os.pardir, "example_data", "mgf_conversion_example", "BSA1.mzML"

)

if os.path.exists(mzML_file) is False:

R.params[

"http_url"

] = "http://sourceforge.net/p/open-ms/code/HEAD/tree/OpenMS/share/OpenMS/examples/BSA/BSA1.mzML?format=raw"

R.params["http_output_folder"] = os.path.dirname(mzML_file)

R.fetch_file(engine="get_http_files_1_0_0")

try:

shutil.move("{0}?format=raw".format(mzML_file), mzML_file)

except:

shutil.move("{0}format=raw".format(mzML_file), mzML_file)

# First method: Convert to MGF outside of the loop:

# (saves some time cause the MGF conversion is not always re-run)

mgf_file = R.convert(

input_file=mzML_file, engine="mzml2mgf_1_0_0" # from OpenMS example files

)

for prefix in ["10ppm", "20ppm"]:

R.params["prefix"] = prefix

output_file = R.search(

input_file=mgf_file,

engine=engine,

# output_file_name = 'some_userdefined_name'

)

# Second method: Automatically convert to MGF inside the loop:

# (MGF conversion is re-run every time because the prexix changed!)

for prefix in ["5ppm", "15ppm"]:

R.params["prefix"] = prefix

output_file = R.search(

input_file=mzML_file, # from OpenMS example files

engine=engine,

# output_file_name = 'another_fname',

)

output_files.append(output_file)

print("\tOutput files:")

for f in output_files:

print(f)

if __name__ == "__main__":

print(__doc__)

main()

Simple Venn diagram¶

-

simple_venn_example.main()¶ Example for plotting a simple Venn diagram with single ursgal csv files.

- usage:

- ./simple_venn_example.py

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Example for plotting a simple Venn diagram with single ursgal csv files.

usage:

./simple_venn_example.py

"""

uc = ursgal.UController(

profile="LTQ XL low res",

params={"visualization_label_positions": {"0": "omssa", "1": "xtandem"}},

)

file_list = [

os.path.join(

os.pardir, "tests", "data", "omssa_2_1_9", "test_BSA1_omssa_2_1_9.csv"

),

os.path.join(

os.pardir,

"tests",

"data",

"xtandem_sledgehammer",

"test_BSA1_xtandem_sledgehammer.csv",

),

]

uc.visualize(

input_files=file_list,

engine="venndiagram_1_1_0",

force=True,

)

return

if __name__ == "__main__":

main()

Sanitize csv¶

-

sanitize_combined_results.main(infile=None)¶ Sanitize an Ursgal result file after combining results from multiple search engines (or single search engine results, with modified parameters)

- usage:

- ./sanitize_combined_results.py <Ursgal_result_file>

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import sys

import os

def main(infile=None):

"""

Sanitize an Ursgal result file after combining results

from multiple search engines (or single search engine results,

with modified parameters)

usage:

./sanitize_combined_results.py <Ursgal_result_file>

"""

mass_spectrometer = "QExactive+"

params = {

"precursor_mass_tolerance_minus": 10,

"precursor_mass_tolerance_plus": 10,

"frag_mass_tolerance": 10,

"frag_mass_tolerance_unit": "ppm",

"-xmx": "32g",

}

uc = ursgal.UController(profile=mass_spectrometer, params=params)

# Parameters need to be adjusted based on the input file

# and the desired type of sanitizing.

# E.g. 'combined PEP' or 'Bayed PEP' can be used for combined PEP results,

# while 'PEP' should be used for results from single engines.

# A minimum difference between the top scoring, conflicting PSMs can be defined

# using the parameters 'score_diff_threshold' and 'threshold_is_log10'

uc.params.update(

{

"validation_score_field": "Bayes PEP",

# 'validation_score_field': 'PEP',

"bigger_scores_better": False,

"num_compared_psms": 25,

"accept_conflicting_psms": False,

"threshold_is_log10": True,

"score_diff_threshold": 0.0,

"psm_defining_colnames": [

"Spectrum Title",

"Sequence",

# 'Modifications',

# 'Charge',

# 'Is decoy',

],

"max_num_psms_per_spec": 1,

# 'preferred_engines': [],

"preferred_engines": [

"msfragger_2_3",

"pipi_1_4_6",

"moda_v1_61",

],

"remove_redundant_psms": False,

}

)

sanitized_combined_results = uc.execute_misc_engine(

input_file=infile,

engine="sanitize_csv",

)

if __name__ == "__main__":

main(

infile=sys.argv[1],

)

Test node execution¶

-

test_node_execution.main()¶ Testscript for executing the test node, which also tests the run time determination function.

- Usage:

- ./test_node_excution.py

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import glob

import os.path

import sys

import tempfile

import time

def main():

"""

Testscript for executing the test node, which also tests the run time

determination function.

Usage:

./test_node_excution.py

"""

uc = ursgal.UController(verbose=True)

temp_int, temp_fpath = tempfile.mkstemp(prefix="ursgal_", suffix=".csv")

temp_fobject = open(temp_fpath, "w")

print("test 1,2,3", file=temp_fobject)

temp_fobject.close()

test_1 = uc.execute_unode(temp_fpath, "_test_node")

test_2 = uc.execute_unode(test_1, "_test_node")

if __name__ == "__main__":

main()

Complete Workflow Scripts¶

Do it all folder wide¶

-

do_it_all_folder_wide.main(folder=None, profile=None, target_decoy_database=None)¶ An example test script to search all mzML files which are present in the specified folder. The search is currently performed on 4 search engines and 2 validation engines.

The machine profile has to be specified as well as the target-decoy database.

usage:

./do_it_all_folder_wide.py <mzML_folder> <profile> <target_decoy_database>Current profiles:

- ‘QExactive+’

- ‘LTQ XL low res’

- ‘LTQ XL high res’

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import sys

import glob

import os

def main(folder=None, profile=None, target_decoy_database=None):

"""

An example test script to search all mzML files which are present in the

specified folder. The search is currently performed on 4 search engines

and 2 validation engines.

The machine profile has to be specified as well as the target-decoy

database.

usage:

./do_it_all_folder_wide.py <mzML_folder> <profile> <target_decoy_database>

Current profiles:

* 'QExactive+'

* 'LTQ XL low res'

* 'LTQ XL high res'

"""

# define folder with mzML_files as sys.argv[1]

mzML_files = []

for mzml in glob.glob(os.path.join("{0}".format(folder), "*.mzML")):

mzML_files.append(mzml)

mass_spectrometer = profile

# We specify all search engines and validation engines that we want to use in a list

# (version numbers might differ on windows or mac):

search_engines = [

"omssa",

"xtandem_vengeance",

"msgfplus_v2016_09_16",

# 'msamanda_1_0_0_6300',

# 'myrimatch_2_1_138',

]

validation_engines = [

"percolator_2_08",

"qvality",

]

# Modifications that should be included in the search

all_mods = [

"C,fix,any,Carbamidomethyl",

"M,opt,any,Oxidation",

# 'N,opt,any,Deamidated',

# 'Q,opt,any,Deamidated',

# 'E,opt,any,Methyl',

# 'K,opt,any,Methyl',

# 'R,opt,any,Methyl',

"*,opt,Prot-N-term,Acetyl",

# 'S,opt,any,Phospho',

# 'T,opt,any,Phospho',

# 'N,opt,any,HexNAc'

]

# Initializing the Ursgal UController class with

# our specified modifications and mass spectrometer

params = {

"database": target_decoy_database,

"modifications": all_mods,

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["PEP", "lte", 0.01],

],

}

uc = ursgal.UController(profile=mass_spectrometer, params=params)

# complete workflow:

# every spectrum file is searched with every search engine,

# results are validated (for each engine seperately),

# validated results are merged and filtered for targets and PEP <= 0.01.

# In the end, all filtered results from all spectrum files are merged

for validation_engine in validation_engines:

result_files = []

for spec_file in mzML_files:

validated_results = []

for search_engine in search_engines:

unified_search_results = uc.search(

input_file=spec_file,

engine=search_engine,

)

validated_csv = uc.validate(

input_file=unified_search_results,

engine=validation_engine,

)

validated_results.append(validated_csv)

validated_results_from_all_engines = uc.execute_misc_engine(

input_file=validated_results,

engine="merge_csvs_1_0_0",

)

filtered_validated_results = uc.execute_misc_engine(

input_file=validated_results_from_all_engines,

engine="filter_csv_1_0_0",

)

result_files.append(filtered_validated_results)

results_all_files = uc.execute_misc_engine(

input_file=result_files,

engine="merge_csvs_1_0_0",

)

if __name__ == "__main__":

if len(sys.argv) < 3:

print(main.__doc__)

sys.exit(1)

main(

folder=sys.argv[1],

profile=sys.argv[2],

target_decoy_database=sys.argv[3],

)

Large scale data analysis¶

-

barth_et_al_large_scale.main(folder)¶ Example script for reproducing the data for figure 3

usage:

./barth_et_al_large_scale.py <folder>The folder determines the target folder where the files will be downloaded

Chlamydomonas reinhardtii samples

Three biological replicates of 4 conditions (2_3, 2_4, 3_1, 4_1)

For more details on the samples please refer to Barth, J.; Bergner, S. V.; Jaeger, D.; Niehues, A.; Schulze, S.; Scholz, M.; Fufezan, C. The interplay of light and oxygen in the reactive oxygen stress response of Chlamydomonas reinhardtii dissected by quantitative mass spectrometry. MCP 2014, 13 (4), 969–989.

Merge all search results (per biological replicate and condition, on folder level) on engine level and validate via percolator.

‘LTQ XL high res’:

- repetition 1

- repetition 2

‘LTQ XL low res’:

- repetition 3

Database:

- Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta

Note

The database and the files will be automatically downloaded from our webpage and peptideatlas

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import glob

import os.path

import sys

def main(folder):

"""

Example script for reproducing the data for figure 3

usage:

./barth_et_al_large_scale.py <folder>

The folder determines the target folder where the files will be downloaded

Chlamydomonas reinhardtii samples

Three biological replicates of 4 conditions (2_3, 2_4, 3_1, 4_1)

For more details on the samples please refer to

Barth, J.; Bergner, S. V.; Jaeger, D.; Niehues, A.; Schulze, S.; Scholz,

M.; Fufezan, C. The interplay of light and oxygen in the reactive oxygen

stress response of Chlamydomonas reinhardtii dissected by quantitative mass

spectrometry. MCP 2014, 13 (4), 969–989.

Merge all search results (per biological replicate and condition, on folder

level) on engine level and validate via percolator.

'LTQ XL high res':

* repetition 1

* repetition 2

'LTQ XL low res':

* repetition 3

Database:

* Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta

Note:

The database and the files will be automatically downloaded from our

webpage and peptideatlas

"""

input_params = {

"database": os.path.join(

os.pardir,

"example_data",

"Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

),

"modifications": [

"M,opt,any,Oxidation",

"*,opt,Prot-N-term,Acetyl", # N-Acetylation

],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_output_folder_root": folder,

"http_url": "https://www.sas.upenn.edu/~sschulze/Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

"http_output_folder": os.path.join(os.pardir, "example_data"),

}

uc = ursgal.UController(params=input_params)

if os.path.exists(input_params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

output_folder_to_file_list = {

("rep1_sample_2_3", "LTQ XL high res"): [

"CF_07062012_pH8_2_3A.mzML",

"CF_13062012_pH3_2_3A.mzML",

"CF_13062012_pH4_2_3A.mzML",

"CF_13062012_pH5_2_3A.mzML",

"CF_13062012_pH6_2_3A.mzML",

"CF_13062012_pH11FT_2_3A.mzML",

],

("rep1_sample_2_4", "LTQ XL high res"): [

"CF_07062012_pH8_2_4A.mzML",

"CF_13062012_pH3_2_4A_120615113039.mzML",

"CF_13062012_pH4_2_4A.mzML",

"CF_13062012_pH5_2_4A.mzML",

"CF_13062012_pH6_2_4A.mzML",

"CF_13062012_pH11FT_2_4A.mzML",

],

("rep1_sample_3_1", "LTQ XL high res"): [

"CF_12062012_pH8_1_3A.mzML",

"CF_13062012_pH3_1_3A.mzML",

"CF_13062012_pH4_1_3A.mzML",

"CF_13062012_pH5_1_3A.mzML",

"CF_13062012_pH6_1_3A.mzML",

"CF_13062012_pH11FT_1_3A.mzML",

],

("rep1_sample_4_1", "LTQ XL high res"): [

"CF_07062012_pH8_1_4A.mzML",

"CF_13062012_pH3_1_4A.mzML",

"CF_13062012_pH4_1_4A.mzML",

"CF_13062012_pH5_1_4A.mzML",

"CF_13062012_pH6_1_4A.mzML",

"CF_13062012_pH11FT_1_4A.mzML",

],

("rep2_sample_2_3", "LTQ XL high res"): [

"JB_18072012_2-3_A_FT.mzML",

"JB_18072012_2-3_A_pH3.mzML",

"JB_18072012_2-3_A_pH4.mzML",

"JB_18072012_2-3_A_pH5.mzML",

"JB_18072012_2-3_A_pH6.mzML",

"JB_18072012_2-3_A_pH8.mzML",

],

("rep2_sample_2_4", "LTQ XL high res"): [

"JB_18072012_2-4_A_FT.mzML",

"JB_18072012_2-4_A_pH3.mzML",

"JB_18072012_2-4_A_pH4.mzML",

"JB_18072012_2-4_A_pH5.mzML",

"JB_18072012_2-4_A_pH6.mzML",

"JB_18072012_2-4_A_pH8.mzML",

],

("rep2_sample_3_1", "LTQ XL high res"): [

"JB_18072012_3-1_A_FT.mzML",

"JB_18072012_3-1_A_pH3.mzML",

"JB_18072012_3-1_A_pH4.mzML",

"JB_18072012_3-1_A_pH5.mzML",

"JB_18072012_3-1_A_pH6.mzML",

"JB_18072012_3-1_A_pH8.mzML",

],

("rep2_sample_4_1", "LTQ XL high res"): [

"JB_18072012_4-1_A_FT.mzML",

"JB_18072012_4-1_A_pH3.mzML",

"JB_18072012_4-1_A_pH4.mzML",

"JB_18072012_4-1_A_pH5.mzML",

"JB_18072012_4-1_A_pH6.mzML",

"JB_18072012_4-1_A_pH8.mzML",

],

("rep3_sample_2_3", "LTQ XL low res"): [

"JB_FASP_pH3_2-3_28122012.mzML",

"JB_FASP_pH4_2-3_28122012.mzML",

"JB_FASP_pH5_2-3_28122012.mzML",

"JB_FASP_pH6_2-3_28122012.mzML",

"JB_FASP_pH8_2-3_28122012.mzML",

"JB_FASP_pH11-FT_2-3_28122012.mzML",

],

("rep3_sample_2_4", "LTQ XL low res"): [

"JB_FASP_pH3_2-4_28122012.mzML",

"JB_FASP_pH4_2-4_28122012.mzML",

"JB_FASP_pH5_2-4_28122012.mzML",

"JB_FASP_pH6_2-4_28122012.mzML",

"JB_FASP_pH8_2-4_28122012.mzML",

"JB_FASP_pH11-FT_2-4_28122012.mzML",

],

("rep3_sample_3_1", "LTQ XL low res"): [

"JB_FASP_pH3_3-1_28122012.mzML",

"JB_FASP_pH4_3-1_28122012.mzML",

"JB_FASP_pH5_3-1_28122012.mzML",

"JB_FASP_pH6_3-1_28122012.mzML",

"JB_FASP_pH8_3-1_28122012.mzML",

"JB_FASP_pH11-FT_3-1_28122012.mzML",

],

("rep3_sample_4_1", "LTQ XL low res"): [

"JB_FASP_pH3_4-1_28122012.mzML",

"JB_FASP_pH4_4-1_28122012.mzML",

"JB_FASP_pH5_4-1_28122012.mzML",

"JB_FASP_pH6_4-1_28122012.mzML",

"JB_FASP_pH8_4-1_28122012.mzML",

"JB_FASP_pH11-FT_4-1_28122012_130121201449.mzML",

],

}

for (outfolder, profile), mzML_file_list in sorted(

output_folder_to_file_list.items()

):

uc.params["ftp_output_folder"] = os.path.join(

input_params["ftp_output_folder_root"], outfolder

)

uc.params["ftp_include_ext"] = mzML_file_list

if os.path.exists(uc.params["ftp_output_folder"]) is False:

os.makedirs(uc.params["ftp_output_folder"])

uc.fetch_file(engine="get_ftp_files_1_0_0")

if os.path.exists(input_params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

search_engines = [

"omssa_2_1_9",

"xtandem_piledriver",

"myrimatch_2_1_138",

"msgfplus_v9979",

"msamanda_1_0_0_5243",

]

# This dict will be populated with the percolator-validated results

# of each engine ( 3 replicates x4 conditions = 12 files each )

percolator_results = {

"omssa_2_1_9": [],

"xtandem_piledriver": [],

"msgfplus_v9979": [],

"myrimatch_2_1_138": [],

"msamanda_1_0_0_5243": [],

}

five_files_for_venn_diagram = []

for search_engine in search_engines:

# This list will collect all 12 result files for each engine,

# after Percolator validation and filtering for PSMs with a

# FDR <= 0.01

filtered_results_of_engine = []

for mzML_dir_ext, mass_spectrometer in output_folder_to_file_list.keys():

# for mass_spectrometer, replicate_dir in replicates:

# for condition_dir in conditions:

uc.set_profile(mass_spectrometer)

mzML_dir = os.path.join(

input_params["ftp_output_folder_root"], mzML_dir_ext

)

# i.e. /media/plan-f/mzML/Christian_Fufezan/ROS_Experiment_2012/Juni_2012/2_3/Tech_A/

# all files ending with .mzml in that directory will be used!

unified_results_list = []

for filename in glob.glob(os.path.join(mzML_dir, "*.mzML")):

# print(filename)

if filename.lower().endswith(".mzml"):

# print(filename)

unified_search_results = uc.search(

input_file=filename,

engine=search_engine,

)

unified_results_list.append(unified_search_results)

# Merging results from the 6 pH-fractions:

merged_unified = uc.execute_misc_engine(

input_file=unified_results_list,

engine="merge_csvs_1_0_0",

)

# Validation with Percolator:

percolator_validated = uc.validate(

input_file=merged_unified,

engine="percolator_2_08", # one could replace this with 'qvality'

)

percolator_results[search_engine].append(percolator_validated)

# At this point, the analysis is finished. We got

# Percolator-validated results for each of the 3

# replicates and 12 conditions.

# But let's see how well the five search engines

# performed! To compare, we collect all PSMs with

# an estimated FDR <= 0.01 for each engine, and

# plot this information with the VennDiagram UNode.

# We will also use the Combine FDR Score method

# to combine the results from all five engines,

# and increase the number of identified peptides.

five_large_merged = []

filtered_final_results = []

# We will estimate the FDR for all 60 files

# (5 engines x12 files) when using percolator PEPs as

# quality score

uc.params["validation_score_field"] = "PEP"

uc.params["bigger_scores_better"] = False

# To make obtain smaller CSV files (and make plotting

# less RAM-intensive, we remove all decoys and PSMs above

# 0.06 FDR

uc.params["csv_filter_rules"] = [

["estimated_FDR", "lte", 0.06],

["Is decoy", "equals", "false"],

]

for engine, percolator_validated_list in percolator_results.items():

# unfiltered files for cFDR script

twelve_merged = uc.execute_misc_engine(

input_file=percolator_validated_list,

engine="merge_csvs_1_0_0",

)

twelve_filtered = []

for one_of_12 in percolator_validated_list:

one_of_12_FDR = uc.validate(

input_file=one_of_12, engine="add_estimated_fdr_1_0_0"

)

one_of_12_FDR_filtered = uc.execute_misc_engine(

input_file=one_of_12_FDR, engine="filter_csv_1_0_0"

)

twelve_filtered.append(one_of_12_FDR_filtered)

# For the combined FDR scoring, we merge all 12 files:

filtered_merged = uc.execute_misc_engine(

input_file=twelve_filtered, engine="merge_csvs_1_0_0"

)

five_large_merged.append(twelve_merged)

filtered_final_results.append(filtered_merged)

# The five big merged files of each engine are combined:

cFDR = uc.combine_search_results(

input_files=five_large_merged,

engine="combine_FDR_0_1",

)

# We estimate the FDR of this combined approach:

uc.params["validation_score_field"] = "Combined FDR Score"

uc.params["bigger_scores_better"] = False

cFDR_FDR = uc.validate(input_file=cFDR, engine="add_estimated_fdr_1_0_0")

# Removing decoys and low quality hits, to obtain a

# smaller file:

uc.params["csv_filter_rules"] = [

["estimated_FDR", "lte", 0.06],

["Is decoy", "equals", "false"],

]

cFDR_filtered_results = uc.execute_misc_engine(

input_file=cFDR_FDR,

engine="filter_csv_1_0_0",

)

filtered_final_results.append(cFDR_filtered_results)

# Since we produced quite a lot of files, let's print the full

# paths to our most important result files so we find them quickly:

print(

"""

These files can now be easily parsed and plotted with your

plotting tool of choice! We used the Python plotting library

matplotlib. Each unique combination of Sequence, modification

and charge was counted as a unique peptide.

"""

)

print("\n########### Result files: ##############")

for result_file in filtered_final_results:

print("\t*{0}".format(result_file))

if __name__ == "__main__":

if len(sys.argv) < 2:

print(main.__doc__)

sys.exit(1)

main(sys.argv[1])

Complete workflow for human BR dataset analysis¶

-

human_br_complete_workflow.main(folder)¶ usage:

./human_br_complete_workflow.py <folder_with_human_br_files>This scripts produces the data for figure 3.

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

import sys

import pprint

def main(folder):

"""

usage:

./human_br_complete_workflow.py <folder_with_human_br_files>

This scripts produces the data for figure 3.

"""

# Initialize the UController:

uc = ursgal.UController(

params={

"enzyme": "trypsin",

"decoy_generation_mode": "reverse_protein",

}

)

# MS Spectra, downloaded from http://proteomecentral.proteomexchange.org

# via the dataset accession PXD000263 and converted to mzML

mass_spec_files = [

"120813OTc1_NQL-AU-0314-LFQ-LCM-SG-01_013.mzML",

"120813OTc1_NQL-AU-0314-LFQ-LCM-SG-02_025.mzML",

"120813OTc1_NQL-AU-0314-LFQ-LCM-SG-03_033.mzML",

"120813OTc1_NQL-AU-0314-LFQ-LCM-SG-04_048.mzML",

]

for mass_spec_file in mass_spec_files:

if os.path.exists(os.path.join(folder, mass_spec_file)) is False:

print(

"Please download RAW files to folder {} and convert to mzML:".format(

folder

)

)

pprint.pprint(mass_spec_files)

sys.exit(1)

# mods from Wen et al. (2015):

modifications = [

# Carbamidomethyl (C) was set as fixed modification

"C,fix,any,Carbamidomethyl",

"M,opt,any,Oxidation", # Oxidation (M) as well as

# Deamidated (NQ) were set as optional modification

"N,opt,any,Deamidated",

# Deamidated (NQ) were set as optional modification

"Q,opt,any,Deamidated",

]

# The target peptide database which will be searched (UniProt Human

# reference proteome from July 2013)

target_database = "uniprot_human_UP000005640_created_until_20130707.fasta"

# Let's turn it into a target decoy database by reversing peptides:

target_decoy_database = uc.execute_misc_engine(

input_file=target_database, engine="generate_target_decoy_1_0_0"

)

# OMSSA parameters from Wen et al. (2015):

omssa_params = {

# (used by default) # -w

"he": "1000", # -he 1000

"zcc": "1", # -zcc 1

"frag_mass_tolerance": "0.6", # -to 0.6

"frag_mass_tolerance_unit": "da", # -to 0.6

"precursor_mass_tolerance_minus": "10", # -te 10

"precursor_mass_tolerance_plus": "10", # -te 10

"precursor_mass_tolerance_unit": "ppm", # -teppm

"score_a_ions": False, # -i 1,4

"score_b_ions": True, # -i 1,4

"score_c_ions": False, # -i 1,4

"score_x_ions": False, # -i 1,4

"score_y_ions": True, # -i 1,4

"score_z_ions": False, # -i 1,4

"enzyme": "trypsin_p", # -e 10

"maximum_missed_cleavages": "1", # -v 1

"precursor_max_charge": "8", # -zh 8

"precursor_min_charge": "1", # -zl 1

"tez": "1", # -tez 1

"precursor_isotope_range": "0,1", # -ti 1

"num_match_spec": "1", # -hc 1

"database": target_decoy_database,

"modifications": modifications,

}

# MS-GF+ parameters from Wen et al. (2015):

msgf_params = {

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_unit": "ppm",

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_minus": "10",

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_plus": "10",

# the max number of optional modifications per peptide were set as 3

# (used by default) # number of allowed isotope errors was set as 1

"enzyme": "trypsin", # the enzyme was set as trypsin

# (used by default) # fully enzymatic peptides were specified, i.e. no non-enzymatic termini

"frag_method": "1", # the fragmentation method selected in the search was CID

"max_pep_length": "45", # the maximum peptide length to consider was set as 45

# the minimum precursor charge to consider if charges are not specified

# in the spectrum file was set as 1

"precursor_min_charge": "1",

# the maximum precursor charge to consider was set as 8

"precursor_max_charge": "8",

# (used by default) # the parameter 'addFeatures' was set as 1 (required for Percolator)

# all of the other parameters were set as default

# the instrument selected

# was High-res

"database": target_decoy_database,

"modifications": modifications,

}

# X!Tandem parameters from Wen et al. (2015):

xtandem_params = {

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_unit": "ppm",

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_minus": "10",

# precursor ion mass tolerance was set to 10 ppm

"precursor_mass_tolerance_plus": "10",

# the fragment ion mass tolerance was set to 0.6 Da

"frag_mass_tolerance": "0.6",

# the fragment ion mass tolerance was set to 0.6 Da

"frag_mass_tolerance_unit": "da",

# parent monoisotopic mass isotope error was set as 'yes'

"precursor_isotope_range": "0,1",

"precursor_max_charge": "8", # maximum parent charge of spectrum was set as 8

"enzyme": "trypsin", # the enzyme was set as trypsin ([RK]|[X])

# the maximum missed cleavage sites were set as 1

"maximum_missed_cleavages": "1",

# (used by default) # no model refinement was employed.

"database": target_decoy_database,

"modifications": modifications,

}

search_engine_settings = [

# not used in Wen et al., so we use the same settings as xtandem

("msamanda_1_0_0_5243", xtandem_params, "LTQ XL high res"),

# not used in Wen et al., so we use the same settings as xtandem

("myrimatch_2_1_138", xtandem_params, "LTQ XL high res"),

# the instrument selected was High-res

("msgfplus_v9979", msgf_params, "LTQ XL high res"),

("xtandem_jackhammer", xtandem_params, None),

("omssa_2_1_9", omssa_params, None),

]

merged_validated_files_3_engines = []

merged_validated_files_5_engines = []

for engine, wen_params, instrument in search_engine_settings:

# Initializing the uPLANIT UController class with

# our specified modifications and mass spectrometer

uc = ursgal.UController(params=wen_params)

if instrument is not None:

uc.set_profile(instrument)

unified_results = []

percolator_validated_results = []

for mzML_file in mass_spec_files:

unified_search_results = uc.search(

input_file=mzML_file,

engine=engine,

)

unified_results.append(unified_search_results)

validated_csv = uc.validate(

input_file=unified_search_results,

engine="percolator_2_08",

)

percolator_validated_results.append(validated_csv)

merged_validated_csv = uc.execute_misc_engine(

input_file=percolator_validated_results, engine="merge_csvs_1_0_0"

)

merged_unvalidated_csv = uc.execute_misc_engine(

input_file=unified_results,

engine="merge_csvs_1_0_0",

)

if engine in ["omssa_2_1_9", "xtandem_jackhammer", "msgfplus_v9979"]:

merged_validated_files_3_engines.append(merged_validated_csv)

merged_validated_files_5_engines.append(merged_validated_csv)

uc.params["prefix"] = "5-engines-summary"

uc.combine_search_results(

input_files=merged_validated_files_5_engines,

engine="combine_FDR_0_1",

)

uc.params["prefix"] = "3-engines-summary"

uc.combine_search_results(

input_files=merged_validated_files_3_engines,

engine="combine_FDR_0_1",

)

if __name__ == "__main__":

if len(sys.argv) < 2:

print(main.__doc__)

sys.exit(1)

main(sys.argv[1])

Complete workflow for analysis with pGlyco¶

-

example_pglyco_workflow.main(input_raw_file=None, database=None, enzyme=None)¶ - This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database, a database including only target sequences must be provided. In this database, the N-glycosylation motif N-X-S/T needs to be replaced with J-X-S/T, which will be done by the pGlyco wrapper.

- usage:

- ./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

#!/usr/bin/env python

# encoding: utf-8

import ursgal

import os

import sys

import shutil

def main(input_raw_file=None, database=None, enzyme=None):

"""

This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database,

a database including only target sequences must be provided.

In this database, the N-glycosylation motif N-X-S/T needs to be replaced

with J-X-S/T, which will be done by the pGlyco wrapper.

usage:

./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

"""

uc = ursgal.UController(

profile="QExactive+",

params={

"database": database,

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation

],

"peptide_mapper_class_version": "UPeptideMapper_v4",

"enzyme": enzyme,

"frag_mass_tolerance": 20,

"frag_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

"aa_exception_dict": {},

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["q-value", "lte", 0.01],

["Conflicting uparam", "contains_not", "enzyme"],

],

},

)

xtracted_file = uc.convert(

input_file=input_raw_file,

engine="pparse_2_2_1",

# force = True,

)

mzml_file = uc.convert(

input_file=input_raw_file,

engine="thermo_raw_file_parser_1_1_2",

)

# os.remove(mzml_file.replace('.mzML', '.mgf'))

# os.remove(mzml_file.replace('.mzML', '.mgf.u.json'))

mgf_file = uc.convert(

input_file=mzml_file,

engine="mzml2mgf_2_0_0",

force=True,

)

search_result = uc.search_mgf(

input_file=xtracted_file,

engine="pglyco_db_2_2_2",

)

mapped_results = uc.execute_misc_engine(

input_file=search_result,

engine="upeptide_mapper",

)

unified_search_results = uc.execute_misc_engine(

input_file=mapped_results, engine="unify_csv"

)

validated_file = uc.validate(

input_file=search_result,

engine="pglyco_fdr_2_2_0",

)

mapped_validated_results = uc.execute_misc_engine(

input_file=validated_file,

engine="upeptide_mapper",

)

unified_validated_results = uc.execute_misc_engine(

input_file=mapped_validated_results, engine="unify_csv"

)

filtered_validated_results = uc.execute_misc_engine(

input_file=unified_validated_results,

engine="filter_csv",

)

return

if __name__ == "__main__":

main(input_raw_file=sys.argv[1], database=sys.argv[2], enzyme=sys.argv[3])

Complete workflow for analysis with TagGraph¶

-

example_pglyco_workflow.main(input_raw_file=None, database=None, enzyme=None) - This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database, a database including only target sequences must be provided. In this database, the N-glycosylation motif N-X-S/T needs to be replaced with J-X-S/T, which will be done by the pGlyco wrapper.

- usage:

- ./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

#!/usr/bin/env python

# encoding: utf-8

import ursgal

import os

import sys

import shutil

def main(input_raw_file=None, database=None, enzyme=None):

"""

This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database,

a database including only target sequences must be provided.

In this database, the N-glycosylation motif N-X-S/T needs to be replaced

with J-X-S/T, which will be done by the pGlyco wrapper.

usage:

./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

"""

uc = ursgal.UController(

profile="QExactive+",

params={

"database": database,

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation

],

"peptide_mapper_class_version": "UPeptideMapper_v4",

"enzyme": enzyme,

"frag_mass_tolerance": 20,

"frag_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

"aa_exception_dict": {},

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["q-value", "lte", 0.01],

["Conflicting uparam", "contains_not", "enzyme"],

],

},

)

xtracted_file = uc.convert(

input_file=input_raw_file,

engine="pparse_2_2_1",

# force = True,

)

mzml_file = uc.convert(

input_file=input_raw_file,

engine="thermo_raw_file_parser_1_1_2",

)

# os.remove(mzml_file.replace('.mzML', '.mgf'))

# os.remove(mzml_file.replace('.mzML', '.mgf.u.json'))

mgf_file = uc.convert(

input_file=mzml_file,

engine="mzml2mgf_2_0_0",

force=True,

)

search_result = uc.search_mgf(

input_file=xtracted_file,

engine="pglyco_db_2_2_2",

)

mapped_results = uc.execute_misc_engine(

input_file=search_result,

engine="upeptide_mapper",

)

unified_search_results = uc.execute_misc_engine(

input_file=mapped_results, engine="unify_csv"

)

validated_file = uc.validate(

input_file=search_result,

engine="pglyco_fdr_2_2_0",

)

mapped_validated_results = uc.execute_misc_engine(

input_file=validated_file,

engine="upeptide_mapper",

)

unified_validated_results = uc.execute_misc_engine(

input_file=mapped_validated_results, engine="unify_csv"

)

filtered_validated_results = uc.execute_misc_engine(

input_file=unified_validated_results,

engine="filter_csv",

)

return

if __name__ == "__main__":

main(input_raw_file=sys.argv[1], database=sys.argv[2], enzyme=sys.argv[3])

Example search for 15N labeling and no label¶

-

search_with_label_15N.main()¶ Executes a search with 3 different search engines on an example file from the data from Barth et al. Two searches are performed pe engine for each 14N (unlabeled) and 15N labeling. The overlap of identified peptides for the 14N and 15N searches between the engines is visualized as well as the overlap between all 14N and 15N identified peptides.

- usage:

- ./search_with_label_15N.py

Note

It is important to convert the mgf file outside of (and before :) ) the search loop to avoid mgf file redundancy and to assure correct retention time mapping in the unify_csv node.

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Executes a search with 3 different search engines on an example file from

the data from Barth et al. Two searches are performed pe engine for each

14N (unlabeled) and 15N labeling. The overlap of identified peptides

for the 14N and 15N searches between the engines is visualized as well as

the overlap between all 14N and 15N identified peptides.

usage:

./search_with_label_15N.py

Note:

It is important to convert the mgf file outside of (and before :) ) the

search loop to avoid mgf file redundancy and to assure correct retention

time mapping in the unify_csv node.

"""

engine_list = [

"omssa_2_1_9",

"xtandem_piledriver",

"msgfplus_v9979",

]

params = {

"database": os.path.join(

os.pardir,

"example_data",

"Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

),

"modifications": [],

"csv_filter_rules": [["PEP", "lte", 0.01], ["Is decoy", "equals", "false"]],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_include_ext": [

"JB_FASP_pH8_2-3_28122012.mzML",

],

"ftp_output_folder": os.path.join(

os.pardir, "example_data", "search_with_label_15N"

),

"http_url": "https://www.sas.upenn.edu/~sschulze/Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

"http_output_folder": os.path.join(os.pardir, "example_data"),

}

if os.path.exists(params["ftp_output_folder"]) is False:

os.mkdir(params["ftp_output_folder"])

uc = ursgal.UController(profile="LTQ XL low res", params=params)

mzML_file = os.path.join(params["ftp_output_folder"], params["ftp_include_ext"][0])

if os.path.exists(mzML_file) is False:

uc.fetch_file(engine="get_ftp_files_1_0_0")

if os.path.exists(params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

mgf_file = uc.convert(

input_file=mzML_file,

engine="mzml2mgf_1_0_0",

)

files_2_merge = {}

label_list = ["14N", "15N"]

for label in label_list:

validated_and_filtered_files_list = []

uc.params["label"] = label

uc.params["prefix"] = label

for engine in engine_list:

search_result = uc.search_mgf(

input_file=mgf_file,

engine=engine,

)

converted_result = uc.convert(

input_file=search_result,

guess_engine=True,

)

mapped_results = uc.execute_misc_engine(

input_file=converted_result,

engine="upeptide_mapper",

)

unified_search_results = uc.execute_misc_engine(

input_file=mapped_results, engine="unify_csv"

)

validated_file = uc.validate(

input_file=unified_search_results,

engine="percolator_2_08",

)

filtered_file = uc.execute_misc_engine(

input_file=validated_file, engine="filter_csv"

)

validated_and_filtered_files_list.append(filtered_file)

files_2_merge[label] = validated_and_filtered_files_list

uc.visualize(

input_files=validated_and_filtered_files_list,

engine="venndiagram_1_1_0",

)

uc.params["prefix"] = None

uc.params["label"] = ""

uc.params["visualization_label_positions"] = {}

label_comparison_file_list = []

for n, label in enumerate(label_list):

uc.params["visualization_label_positions"][str(n)] = label

label_comparison_file_list.append(

uc.execute_misc_engine(input_file=files_2_merge[label], engine="merge_csvs")

)

uc.visualize(

input_files=label_comparison_file_list,

engine="venndiagram_1_1_0",

)

return

if __name__ == "__main__":

main()

Open Modification Search Scripts¶

Open modification search with three engines and combined PEP¶

-

open_modification_search_incl_combined_pep.main(folder=None, database=None, enzyme=None)¶ Example workflow to perform a open modification search with three independent search engines across all mzML files of a given folder and to statistically post-process and combine the results of all searches.

- Usage:

- ./open_modification_search_incl_combined_pep.py <mzML_folder> <database> <enzyme>

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import sys

import glob

import os

import pprint

from collections import defaultdict

def main(folder=None, database=None, enzyme=None):

"""

Example workflow to perform a open modification search with three independent search engines

across all mzML files of a given folder and to statistically post-process and combine the

results of all searches.

Usage:

./open_modification_search_incl_combined_pep.py <mzML_folder> <database> <enzyme>

"""

# For this particular dataset, two enzymes were used, namely gluc and trypsin.

mzml_files = []

for mzml in glob.glob(os.path.join(folder, "*.mzML")):

mzml_files.append(mzml)

mass_spectrometer = "QExactive+"

validation_engine = "percolator_3_4_0"

search_engines = ["msfragger_2_3", "pipi_1_4_6", "moda_v1_61"]

params = {

"modifications": ["C,fix,any,Carbamidomethyl"],

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["PEP", "lte", 0.01],

],

"frag_mass_tolerance_unit": "ppm",

"frag_mass_tolerance": 20,

"precursor_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

"moda_high_res": False,

"max_mod_size": 4000,

"min_mod_size": -200,

"precursor_true_units": "ppm",

"precursor_true_tolerance": 5,

"percolator_post_processing": "mix-max",

"psm_defining_colnames": [

"Spectrum Title",

"Sequence",

"Modifications",

"Charge",

"Is decoy",

"Mass Difference",

],

"database": database,

"enzyme": enzyme,

}

uc = ursgal.UController(

profile=mass_spectrometer,

params=params,

)

# This will hold input to combined PEP engine

combined_pep_input = defaultdict(list)

# This dictionary will help organize which results to merge

all_merged_results = defaultdict(list)

for search_engine in search_engines:

# The modification size for MSFragger is configured through precursor mass tolerance

if search_engine == "msfragger_2_3":

uc.params.update(

{

"precursor_mass_tolerance_unit": "da",

"precursor_mass_tolerance_plus": 4000,

"precursor_mass_tolerance_minus": 200,

}

)

for n, spec_file in enumerate(mzml_files):

# 1. convert to MGF

mgf_file = uc.convert(

input_file=spec_file,

engine="mzml2mgf_2_0_0",

)

# 2. do the actual search

raw_search_results = uc.search_mgf(

input_file=mgf_file,

engine=search_engine,

)

# reset precursor mass tolerance just in case it was previously changed

uc.params.update(

{

"precursor_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

}

)

# 3. convert files to csv

csv_search_results = uc.convert(

input_file=raw_search_results,

engine=None,

guess_engine=True,

)

# 4. protein mapping.

mapped_csv_search_results = uc.execute_misc_engine(

input_file=csv_search_results,

engine="upeptide_mapper_1_0_0",

)

# 5. Convert csv to unified ursgal csv format:

unified_search_results = uc.execute_misc_engine(

input_file=mapped_csv_search_results,

engine="unify_csv_1_0_0",

merge_duplicates=False,

)

# 6. Validate the results

validated_csv = uc.validate(

input_file=unified_search_results,

engine=validation_engine,

)

# save the validated input for combined pep

# Eventually, each sample will have 3 files correpsonding to the 3 search engines

combined_pep_input["sample_{0}".format(n)].append(validated_csv)

filtered_validated_results = uc.execute_misc_engine(

input_file=validated_csv,

engine="filter_csv_1_0_0",

merge_duplicates=False,

)

all_merged_results["percolator_only"].append(filtered_validated_results)

# combined pep

uc.params.update(

{

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["combined PEP", "lte", 0.01],

],

"psm_defining_colnames": [

"Spectrum Title",

"Sequence",

"Modifications",

"Charge",

"Is decoy",

],

}

)

for sample in combined_pep_input.keys():

combine_results = uc.execute_misc_engine(

input_file=combined_pep_input[sample],

engine="combine_pep_1_0_0",

)

filtered_validated_results = uc.execute_misc_engine(

input_file=combine_results,

engine="filter_csv_1_0_0",

)

all_merged_results["combined_pep"].append(filtered_validated_results)

# separately merge results from the two types of validation techniques

# We also add back "Mass Difference" to columns defining a PSM to avoid merging mass differences

uc.params.update(

{

"psm_defining_colnames": [

"Spectrum Title",

"Sequence",

"Modifications",

"Charge",

"Is decoy",

"Mass Difference",

],

}

)

for validation_type in all_merged_results.keys():

if validation_type == "percolator_only":

uc.params["psm_colnames_to_merge_multiple_values"] = {

"PEP": "min_value",

}

else:

uc.params["psm_colnames_to_merge_multiple_values"] = {

"combined PEP": "min_value",

"Bayes PEP": "min_value",

}

uc.params["prefix"] = "All_{0}".format(

validation_type

) # helps recognize files easily

merged_results_one_rep = uc.execute_misc_engine(

input_file=all_merged_results[validation_type],

engine="merge_csvs_1_0_0",

merge_duplicates=True,

)

uc.params["prefix"] = ""

if __name__ == "__main__":

main(

folder=sys.argv[1],

database=sys.argv[2],

enzyme=sys.argv[3],

)

Complete workflow for analysis with TagGraph¶

-

example_pglyco_workflow.main(input_raw_file=None, database=None, enzyme=None) - This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database, a database including only target sequences must be provided. In this database, the N-glycosylation motif N-X-S/T needs to be replaced with J-X-S/T, which will be done by the pGlyco wrapper.

- usage:

- ./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

#!/usr/bin/env python

# encoding: utf-8

import ursgal

import os

import sys

import shutil

def main(input_raw_file=None, database=None, enzyme=None):

"""

This script executes three steps:

- convert .raw file using pParse

- search the resulting .mgf file using pGlyco

- validate results using pGlycoFDR

Since pGlyco does not support providing a custom target-decoy database,

a database including only target sequences must be provided.

In this database, the N-glycosylation motif N-X-S/T needs to be replaced

with J-X-S/T, which will be done by the pGlyco wrapper.

usage:

./simple_example_pglyco_workflow.py <input_raw_file> <database> <enzyme>

"""

uc = ursgal.UController(

profile="QExactive+",

params={

"database": database,

"modifications": [

"M,opt,any,Oxidation", # Met oxidation

"C,fix,any,Carbamidomethyl", # Carbamidomethylation

"*,opt,Prot-N-term,Acetyl", # N-Acteylation

],

"peptide_mapper_class_version": "UPeptideMapper_v4",

"enzyme": enzyme,

"frag_mass_tolerance": 20,

"frag_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

"aa_exception_dict": {},

"csv_filter_rules": [

["Is decoy", "equals", "false"],

["q-value", "lte", 0.01],

["Conflicting uparam", "contains_not", "enzyme"],

],

},

)

xtracted_file = uc.convert(

input_file=input_raw_file,

engine="pparse_2_2_1",

# force = True,

)

mzml_file = uc.convert(

input_file=input_raw_file,

engine="thermo_raw_file_parser_1_1_2",

)

# os.remove(mzml_file.replace('.mzML', '.mgf'))

# os.remove(mzml_file.replace('.mzML', '.mgf.u.json'))

mgf_file = uc.convert(

input_file=mzml_file,

engine="mzml2mgf_2_0_0",

force=True,

)

search_result = uc.search_mgf(

input_file=xtracted_file,

engine="pglyco_db_2_2_2",

)

mapped_results = uc.execute_misc_engine(

input_file=search_result,

engine="upeptide_mapper",

)

unified_search_results = uc.execute_misc_engine(

input_file=mapped_results, engine="unify_csv"

)

validated_file = uc.validate(

input_file=search_result,

engine="pglyco_fdr_2_2_0",

)

mapped_validated_results = uc.execute_misc_engine(

input_file=validated_file,

engine="upeptide_mapper",

)

unified_validated_results = uc.execute_misc_engine(

input_file=mapped_validated_results, engine="unify_csv"

)

filtered_validated_results = uc.execute_misc_engine(

input_file=unified_validated_results,

engine="filter_csv",

)

return

if __name__ == "__main__":

main(input_raw_file=sys.argv[1], database=sys.argv[2], enzyme=sys.argv[3])

Processing of mass differences using PTM-Shepherd¶

-

ptmshepherd_mass_difference_processing.main(folder=None, sanitized_search_results=None)¶ Downstream processing of mass differences from open modification search results using PTM-Shepherd. A folder containing all relevant mzML files and a sanitized (one PSM per spectrum) Ursgal result file (containing open modification search results) are required.

- usage:

- ./ptmshepherd_mass_difference_processing.py <folder_with_mzML> <sanitized_result_file>

#!/usr/bin/env python3

import os

import ursgal

import sys

import glob

from collections import defaultdict as ddict

import csv

def main(folder=None, sanitized_search_results=None):

"""

Downstream processing of mass differences from open modification search results

using PTM-Shepherd.

A folder containing all relevant mzML files and a sanitized (one PSM per spectrum)

Ursgal result file (containing open modification search results) are required.

usage:

./ptmshepherd_mass_difference_processing.py <folder_with_mzML> <sanitized_result_file>

"""

mzml_files = []

for mzml in glob.glob(os.path.join(folder, "*.mzML")):

mzml_files.append(mzml)

params = {

"use_pyqms_for_mz_calculation": True,

"cpus": 8,

"precursor_mass_tolerance_unit": "ppm",

"precursor_mass_tolerance_plus": 5,

"precursor_mass_tolerance_minus": 5,

"frag_mass_tolerance_unit": "ppm",

"frag_mass_tolerance": 20,

"modifications": [

"C,opt,any,Carbamidomethyl",

],

"-xmx": "12G",

"psm_defining_colnames": [

"Spectrum Title",

"Sequence",

# 'Modifications',

# 'Mass Difference',

# 'Charge',

# 'Is decoy',

],

"mzml_input_files": mzml_files,

"validation_score_field": "combined PEP",

"bigger_scores_better": False,

}

uc = ursgal.UController(params=params, profile="QExactive+", verbose=False)

ptmshepherd_results = uc.validate(

input_file=sanitized_search_results,

engine="ptmshepherd_0_3_5",

# force=True,

)

if __name__ == "__main__":

main(

folder=sys.argv[1],

sanitized_search_results=sys.argv[2],

)

Version Comparison Scripts¶

X!Tandem version comparison¶

-

xtandem_version_comparison.main()¶ Executes a search with 5 versions of X!Tandem on an example file from the data from Barth et al. 2014.

- usage:

- ./xtandem_version_comparison.py

This is a simple example file to show the straightforward comparison of different program versions of X!Tandem

Creates a Venn diagram with the peptides obtained by the different versions.

Note

At the moment in total 6 XTandem versions are incorporated in Ursgal.

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Executes a search with 5 versions of X!Tandem on an example file from the

data from Barth et al. 2014.

usage:

./xtandem_version_comparison.py

This is a simple example file to show the straightforward comparison of

different program versions of X!Tandem

Creates a Venn diagram with the peptides obtained by the different versions.

Note:

At the moment in total 6 XTandem versions are incorporated in Ursgal.

"""

engine_list = [

# 'xtandem_cyclone',

"xtandem_jackhammer",

"xtandem_sledgehammer",

"xtandem_piledriver",

"xtandem_vengeance",

"xtandem_alanine",

]

params = {

"database": os.path.join(

os.pardir,

"example_data",

"Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

),

"modifications": [],

"csv_filter_rules": [["PEP", "lte", 0.01], ["Is decoy", "equals", "false"]],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_include_ext": [

"JB_FASP_pH8_2-3_28122012.mzML",

],

"ftp_output_folder": os.path.join(

os.pardir, "example_data", "xtandem_version_comparison"

),

"http_url": "https://www.sas.upenn.edu/~sschulze/Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

"http_output_folder": os.path.join(os.pardir, "example_data"),

}

if os.path.exists(params["ftp_output_folder"]) is False:

os.mkdir(params["ftp_output_folder"])

uc = ursgal.UController(profile="LTQ XL low res", params=params)

mzML_file = os.path.join(params["ftp_output_folder"], params["ftp_include_ext"][0])

if os.path.exists(mzML_file) is False:

uc.fetch_file(engine="get_ftp_files_1_0_0")

if os.path.exists(params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

filtered_files_list = []

for engine in engine_list:

unified_result_file = uc.search(

input_file=mzML_file,

engine=engine,

)

validated_file = uc.validate(

input_file=unified_result_file,

engine="percolator_2_08",

)

filtered_file = uc.execute_misc_engine(

input_file=validated_file,

engine="filter_csv_1_0_0",

)

filtered_files_list.append(filtered_file)

uc.visualize(

input_files=filtered_files_list,

engine="venndiagram_1_1_0",

)

return

if __name__ == "__main__":

main()

MSGF+ version comparison¶

-

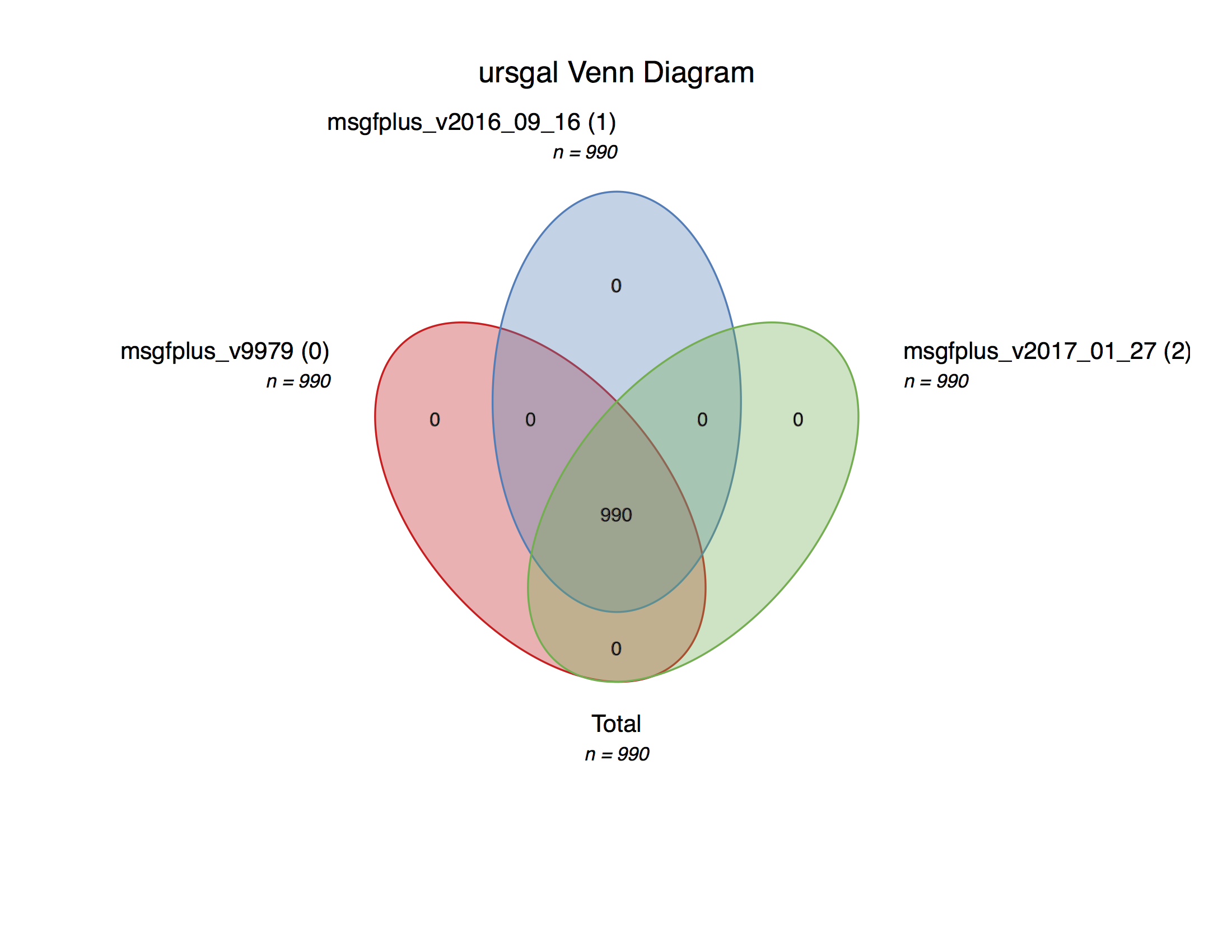

msgf_version_comparison.main()¶ Executes a search with a current maximum of 3 versions of MSGF+ on an example file from the data from Barth et al. (2014)

- usage:

- ./msgf_version_comparison.py

This is a simple example file to show the straightforward comparison of different program versions of MSGF+

Creates a Venn diagram with the peptides obtained by the different versions.

An example plot can be found in the online documentation.

Note

Uses the new MS-GF+ C# mzid converter if available

#!/usr/bin/env python3

# encoding: utf-8

import ursgal

import os

def main():

"""

Executes a search with a current maximum of 3 versions of MSGF+ on an

example file from the data from Barth et al. (2014)

usage:

./msgf_version_comparison.py

This is a simple example file to show the straightforward comparison of

different program versions of MSGF+

Creates a Venn diagram with the peptides obtained by the different

versions.

An example plot can be found in the online documentation.

Note:

Uses the new MS-GF+ C# mzid converter if available

"""

params = {

"database": os.path.join(

os.pardir,

"example_data",

"Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

),

"csv_filter_rules": [["PEP", "lte", 0.01], ["Is decoy", "equals", "false"]],

"ftp_url": "ftp.peptideatlas.org",

"ftp_login": "PASS00269",

"ftp_password": "FI4645a",

"ftp_include_ext": [

"JB_FASP_pH8_2-3_28122012.mzML",

],

"ftp_output_folder": os.path.join(

os.pardir, "example_data", "msgf_version_comparison"

),

"http_url": "https://www.sas.upenn.edu/~sschulze/Creinhardtii_281_v5_5_CP_MT_with_contaminants_target_decoy.fasta",

"http_output_folder": os.path.join(os.pardir, "example_data"),

"remove_temporary_files": False,

}

if os.path.exists(params["ftp_output_folder"]) is False:

os.mkdir(params["ftp_output_folder"])

uc = ursgal.UController(profile="LTQ XL low res", params=params)

engine_list = [

"msgfplus_v9979",

"msgfplus_v2016_09_16",

]

if "msgfplus_v2017_01_27" in uc.unodes.keys():

if uc.unodes["msgfplus_v2017_01_27"]["available"]:

engine_list.append("msgfplus_v2017_01_27")

if "msgfplus_v2018_01_30" in uc.unodes.keys():

if uc.unodes["msgfplus_v2018_01_30"]["available"]:

engine_list.append("msgfplus_v2018_01_30")

if "msgfplus_C_mzid2csv_v2017_07_04" in uc.unodes.keys():

if uc.unodes["msgfplus_C_mzid2csv_v2017_07_04"]["available"]:

uc.params[

"msgfplus_mzid_converter_version"

] = "msgfplus_C_mzid2csv_v2017_07_04"

mzML_file = os.path.join(params["ftp_output_folder"], params["ftp_include_ext"][0])

if os.path.exists(mzML_file) is False:

uc.fetch_file(engine="get_ftp_files_1_0_0")

if os.path.exists(params["database"]) is False:

uc.fetch_file(engine="get_http_files_1_0_0")

filtered_files_list = []

for engine in engine_list:

unified_result_file = uc.search(

input_file=mzML_file,

engine=engine,

)

validated_file = uc.validate(

input_file=unified_result_file,

engine="percolator_2_08",

)

filtered_file = uc.execute_misc_engine(

input_file=validated_file,

engine="filter_csv_1_0_0",

)

filtered_files_list.append(filtered_file)

uc.visualize(

input_files=filtered_files_list,

engine="venndiagram_1_1_0",

)

return

if __name__ == "__main__":

main()

Bruderer et al. (2015) X!Tandem comparison incl. machine ppm offset¶

-

xtandem_version_comparison_qexactive.main(folder)¶ Executes a search with 5 versions of X!Tandem on an example file from the data from Bruderer et al. 2015.

- usage:

- ./xtandem_version_comparison.py <folder containing B_D140314_SGSDSsample1_R01_MSG_T0.mzML>

This is a simple example file to show the straightforward comparison of different program versions of X!Tandem, similar to the example script ‘xtandem_version_comparison’, but analyzing high resolution data which can be better handled by version newer than Jackhammer. One gains approximately 10 percent more peptides with newer versions of X!Tandem.

Creates a Venn diagram with the peptides obtained by the different versions.

#!/usr/bin/env python3

# encoding: utf-8